Precomputing speeds up cloth imaging

By Bill Steele

Creating a computer graphic model of a uniform material like woven cloth or finished wood can be done by modeling a small volume, like one yarn crossing, and repeating it over and over, perhaps with minor modifications for color or brightness. But the final “rendering” step, where the computer creates an image of the model, can require far too much calculating for practical use. Cornell graphics researchers have extended the idea of repetition to make the calculation much simpler and faster.

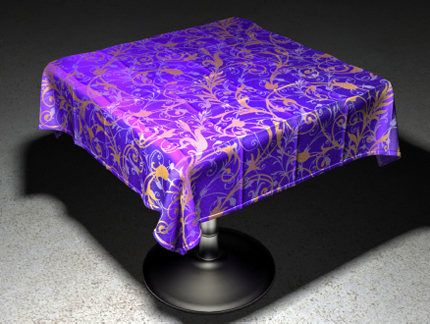

Rendering an image of a patterned silk tablecloth the old way took 404 hours of calculation, according to Kavita Bala, associate professor of computer science. The new method, developed by Cornell graduate student Shuang Zhao in collaboration with researchers at the University of California, Berkeley, and Autodesk, cut the time to about one-seventh of that. With thicker fabrics, the computation ran 10 or 12 times faster.

The researchers shared their work with the computer graphics community at the 2013 SIGGRAPH conference, July 21-25, in Anaheim, Calif.

Cloth simulations can allow fabric designers to see what the finished product will look like before feeding the design into a loom, Bala said. “You do appearance modeling, then only build it after you're happy with what you see,” she explained. Bala and Steve Marschner, associate professor of computer science, have been working in collaboration with designers at the Rhode Island School of Design. Filmmakers also want good images of cloth, she added.

A computer graphic image begins with a 3-D model of the object’s surface. To render an image, the computer must calculate the path of light rays as they are reflected from the surface. Cloth is particularly complicated because light penetrates into the surface and scatters a bit before emerging and traveling to the eye. It’s the pattern of this scattering that creates different highlights on silk, wool or felt.

The Cornell researchers previously used high-resolution computed tomography (CT) scans of real fabric to guide them in building micron-resolution models, piling together hundreds of blocks per square centimeter to create the complete image. Brute-force rendering computes the path of light through every block individually, adjusting at each step for the fact that blocks of different color and brightness will have different scattering patterns.

The new method precomputes the patterns of a set of example blocks – anywhere from two dozen to more than 100 – representing the various possibilities. These become a database the computer can consult as it processes each block of the full image. For each type of block, the precomputation shows how light will travel inside the block and pass through the sides to adjacent blocks.
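The idea of computing once per example block and then looking the result up can be illustrated with a toy sketch. This is not the researchers' code: the function `simulate_block`, its formula, and the single "transmittance" number per block are hypothetical stand-ins for the far richer light-transport data the real method stores. The sketch only shows why the number of expensive computations drops from one per block to one per block type.

```python
import math
from typing import Dict, List

# Counter for how many times the "expensive" simulation runs.
call_count = {"n": 0}

def simulate_block(block_type: int) -> float:
    """Hypothetical stand-in for an expensive per-block light simulation.

    Returns a toy transmittance in (0, 1]; the formula is made up.
    """
    call_count["n"] += 1
    return 1.0 / (1.0 + 0.1 * block_type)

def render_brute_force(blocks: List[int]) -> float:
    """Simulate every block individually, as in brute-force rendering."""
    light = 1.0
    for block_type in blocks:
        light *= simulate_block(block_type)
    return light

def precompute_database(blocks: List[int]) -> Dict[int, float]:
    """Simulate each distinct example block once and store the result."""
    return {t: simulate_block(t) for t in sorted(set(blocks))}

def render_with_database(blocks: List[int], database: Dict[int, float]) -> float:
    """Process each block by consulting the precomputed database."""
    light = 1.0
    for block_type in blocks:
        light *= database[block_type]
    return light

# A toy "fabric": 600 blocks drawn from only 3 block types.
fabric = [0, 1, 2, 1, 0, 2] * 100

call_count["n"] = 0
brute_result = render_brute_force(fabric)
brute_calls = call_count["n"]

call_count["n"] = 0
database = precompute_database(fabric)
fast_result = render_with_database(fabric, database)
fast_calls = call_count["n"]

print(f"brute force: {brute_calls} simulations")
print(f"with database: {fast_calls} simulations")
print("results match:", math.isclose(brute_result, fast_result))
```

In this toy the two approaches give the same answer, while the database version runs the simulation only once per block type. The real speedups reported by the researchers depend on the fabric and on details that this sketch does not model.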

In tests, the researchers first rendered images of plain-colored fabrics, showing that the results compared favorably in appearance with the old brute-force method. Then they produced images of patterned tablecloths and pillows. Patterned fabrics require larger databases of example blocks, but the researchers noted that once the database is computed, it can be re-used for numerous different patterns. The method could be employed on other materials besides cloth, the researchers noted, as long as the surface can be represented by a small number of example blocks. They demonstrated with images of finished wood and a coral-like structure.

The research was supported by the National Science Foundation and the Intel Science and Technology Foundation for Visual Computing, with equipment donated by NVIDIA, Adobe and Pixar.
