Maybe robots should, like, hedge a little

By Bill Steele

Before long, robots will be giving us helpful advice, but we don’t want them to be snippy about it. Research at Cornell and Carnegie Mellon universities suggests that if they sound a little less sure of themselves and throw in a few of the meaningless words humans are fond of, listeners will have a more positive response.

“People use these strategies even when they know exactly,” explained Susan Fussell, associate professor of communication. “It comes off more polite.”

The study, “How a Robot Should Give Advice,” was conducted at Carnegie Mellon while Fussell was teaching there, and reported at the Eighth Annual ACM/IEEE Conference on Human-Robot Interaction, March 3-6, 2013, in Tokyo. Co-authors are Sara Kiesler, the Hillman Professor of Computer Science at Carnegie Mellon, and former graduate student Cristen Torrey, now at Adobe Systems.

Giving advice can be tricky, because it can be seen as “face-threatening.” You’re saying in effect that the advisee is not smart. The best approach, the researchers suggest, is for the adviser not to claim to be all that smart either, and to use informal language. In a series of experiments, human observers saw robots and humans as more likeable and less controlling when they used hedges like “maybe,” “probably” or “I think,” along with what linguistic specialists call “discourse markers” – words that add no meaning but announce that something new is coming up: “You know,” “just,” “well,” “like” and even “uhm.”

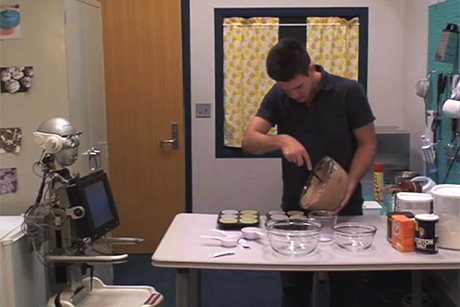

The researchers videotaped novice bakers making cupcakes while a helper gave instructions. To compare reactions to robot and human helpers, they made duplicate videos that kept the original soundtrack but superimposed a robot over the image of the human helper. The human helpers were actors working from scripts that either used straightforward instructions or included various combinations of hedges and discourse markers. A series of subjects watched the videos and filled out questionnaires describing their reactions to the helpers. They were asked to rate the helpers on scales of consideration, controlling and likability, then write a free-form description of the video.

Both humans and robots were described more positively when they used hedges and discourse markers, and robots using those strategies actually came out better than humans. One possible explanation for that, the researchers said, is that people are surprised to hear robots using informal language. “The robots need to seem human, so they may say things that don’t seem normal when a robot says them,” Fussell said. Psychologists have shown that such “expectancy violation” can produce a stronger reaction.

Another surprise was that while hedges alone or discourse markers alone made the helpers more acceptable, combining both strategies did not improve their score.

The ultimate message, the researchers said, is that designers of robots that deliver verbal advice should use language that makes the recipients more at ease. Along with the use of hedges and discourse markers, they noted, the literature of communications research may provide other helpful strategies.

And all this may be good advice for human communicators as well. Perhaps with that in mind, the researchers whimsically end their paper with “Maybe, uhm, it will help robots give help.”

The research was supported by the National Science Foundation.
