Crash, bang, rumble! Bringing noise to virtual worlds

By Bill Steele

When you kick over a garbage can, it doesn't make a pure, musical tone. That's why the sound is so hard to synthesize.

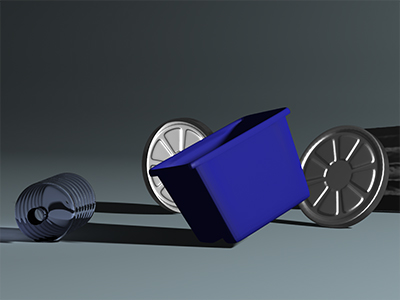

But now Cornell computer scientists have developed a practical method to generate the crashing and rumbling sounds of objects made up of thin "harmonic shells," including the sounds of cymbals, falling garbage cans and lids, and plastic water-cooler bottles and recycling bins.

The work, by graduate students Jeffrey Chadwick and Steven An and associate professor of computer science Doug James, will be presented at the SIGGRAPH Asia conference in Yokohama, Japan, in December.

As virtual environments become more realistic and immersive, the researchers point out, computers will have to generate sounds that match the behavior of objects in real time. Even in an animated movie, where sound effects can be dubbed in afterward from recordings of real sounds, synthesized sounds can be matched more closely to the action. So the goal is to start with the computer model of an object already created by animators, then analyze how such an object would vibrate when dropped or struck and how that vibration would be transferred to the air to radiate as sound.

When a thin-shelled object is struck or falls, the metal or plastic sheet slightly deforms and snaps back, triggering a vibration. To simulate the deformation, the computer divides the shell into many small triangles and calculates how the angles between triangles change and how much the sides of the triangles are stretched. What makes this difficult is that the shell vibrates in several different ways at once, and these modes of vibration are "coupled" -- energy transfers from one to another and back again. Previous methods of sound synthesis for shells did not take this into account, James said, and the result was a clean, clear sound, appropriate for bells and wind chimes, but not for things that crash and rumble.
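To make the two measurements concrete, here is a minimal Python sketch of how stretch along a triangle side and the change in angle between two neighboring triangles might be computed. The function names (`edge_stretch`, `fold_angle`) and the vertex layout are illustrative assumptions, not the researchers' code, and a real shell model would turn these quantities into elastic forces.

```python
import math

# Small vector helpers on 3-tuples (illustrative, standard library only).
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def norm(a):
    return math.sqrt(dot(a, a))

def edge_stretch(p, q, rest_length):
    """Relative change in length of side p-q compared with its rest length."""
    return norm(sub(q, p)) / rest_length - 1.0

def fold_angle(a, b, c, d):
    """Angle in radians between the normals of triangles (a, b, c) and
    (b, a, d), which share the side a-b. Zero means the pair is flat."""
    n1 = cross(sub(b, a), sub(c, a))
    n2 = cross(sub(a, b), sub(d, b))
    cos_angle = dot(n1, n2) / (norm(n1) * norm(n2))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.acos(max(-1.0, min(1.0, cos_angle)))

# Hypothetical example: two triangles hinged along the x-axis.
a, b, c = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, 1.0, 0.0)
flat_d = (0.5, -1.0, 0.0)    # second triangle lies in the same plane
bent_d = (0.5, 0.0, 1.0)     # second triangle folded to a right angle

print(fold_angle(a, b, c, flat_d))
print(fold_angle(a, b, c, bent_d))
print(edge_stretch(a, (1.1, 0.0, 0.0), rest_length=1.0))
```

In this sketch, bending shows up as a nonzero fold angle across a shared side and stretching as a nonzero relative length change; both would be evaluated for every triangle pair and side in the mesh.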

The calculation must be stepped through time at audio frequencies, in this case computing the state of the object every 1/44,100 of a second. Time-stepping a large mesh of triangles would take weeks of computer time, so the researchers approximate the response by sampling a few hundred triangles (out of thousands) and interpolating between them, a process they call "cubature."
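As a rough illustration of stepping at the audio rate, the Python sketch below advances two vibration modes once per 1/44,100 of a second and lets energy pass between them. It is a toy under stated assumptions: the function `simulate`, the two frequencies and the linear spring-like `coupling` term are all hypothetical stand-ins, since the article does not give the actual coupling terms or the integration scheme the researchers use.

```python
import math

SAMPLE_RATE = 44_100           # audio samples per second, as in the article
DT = 1.0 / SAMPLE_RATE         # one time step = 1/44,100 of a second

def simulate(f1_hz, f2_hz, coupling, seconds, q1=1.0, q2=0.0):
    """Step two oscillators with semi-implicit (symplectic) Euler.

    q1 and q2 are the displacements of two modes. The coupling term is an
    assumed linear spring between them, used only for illustration.
    Returns one (q1, q2) pair per audio sample.
    """
    k1 = (2.0 * math.pi * f1_hz) ** 2   # squared angular frequency, mode 1
    k2 = (2.0 * math.pi * f2_hz) ** 2   # squared angular frequency, mode 2
    v1 = v2 = 0.0
    history = []
    for _ in range(int(round(seconds * SAMPLE_RATE))):
        a1 = -k1 * q1 - coupling * (q1 - q2)
        a2 = -k2 * q2 - coupling * (q2 - q1)
        v1 += DT * a1                   # update velocities first...
        v2 += DT * a2
        q1 += DT * v1                   # ...then positions with new velocities
        q2 += DT * v2
        history.append((q1, q2))
    return history

# Hypothetical usage: only mode 1 is excited at the start.
uncoupled = simulate(440.0, 445.0, coupling=0.0, seconds=0.05)
coupled = simulate(440.0, 445.0, coupling=1.0e5, seconds=0.05)

print(len(coupled))                                # number of time steps taken
print(max(abs(q2) for _, q2 in uncoupled))         # mode 2 never moves
print(max(abs(q2) for _, q2 in coupled))           # mode 2 picks up energy
```

Even this two-mode toy needs 44,100 updates per second of sound. Repeating such updates over thousands of triangles is what makes the full calculation slow, which is the cost the sampling approach described above is meant to reduce.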

The final step is to map out how the sound waves radiate to determine how the event will sound to a listener at any particular location. Calculating how vibrations of the object move the air is a standard, off-the-shelf process used by engineers who design real-world objects (a lot of work goes into making machinery quieter), but it's too slow for sound synthesis, so the radiation model is pre-computed to save time.

Even with these refinements, the system is not ready for real time, James reported. The computations for simple demonstrations still take about an hour on a laptop computer.

"There's some hope that we can speed this up," he said, "by making other approximations." Nevertheless, he said, previous methods of generating these sounds could take weeks, "but now we can do it in hours."

The work on thin shells is part of a larger project in James' lab to synthesize a variety of sounds, including those of dripping and splashing fluids, small objects clattering together and shattering glass.

The research is funded by the National Science Foundation and the Alfred P. Sloan Foundation, with donations by Pixar, Intel, Autodesk and Advanced CAE Research.
