Physicists unify the structure of scientific theories

By Anne Ju

Cornell physicists have figured out why science works. Or rather, they’ve posited a theory for why scientific theories work – a meta theory.

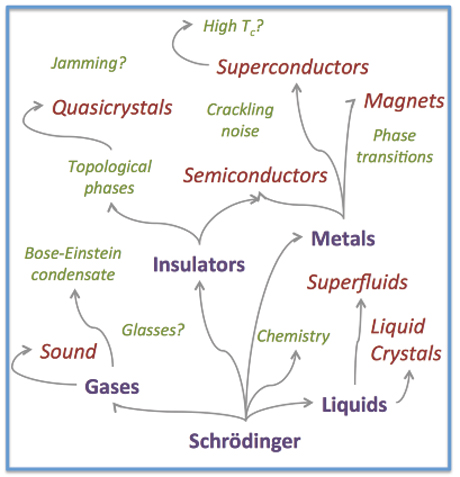

Publishing online in the journal Science Nov. 1, a team led by physics professor James P. Sethna has developed a unified computational framework that exposes the hidden hierarchy of scientific theories by quantifying the degree to which predictions – for example, how a particular cellular mechanism might work under certain conditions, or how sound travels through space – depend on the detailed variables of a model.

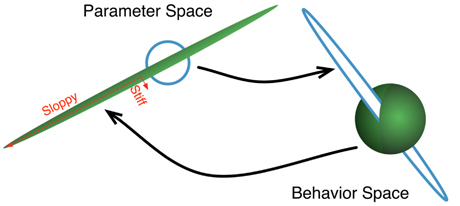

They find that in an impossibly complex system like a cell, only a few combinations of those variables end up predicting how the system will behave. The collective behavior of such systems depends on a few “stiff” combinations of rules; most of the other details in the system they call “sloppy” – relatively unimportant when it comes to how the entire thing works.
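To make the stiff/sloppy distinction concrete, here is a minimal sketch in Python. It is an illustration, not the team's published code. It assumes a toy model, a sum of decaying exponentials with hypothetical rates, and it assumes that sensitivity can be measured by the eigenvalues of J^T J, where J holds the derivatives of each prediction with respect to the logarithm of each rate. The function name `sensitivity_spectrum` and all numerical values are made up for the example.

```python
import numpy as np

def sensitivity_spectrum(rates, times):
    """Eigenvalues of J^T J for the toy model y(t) = sum_i exp(-rates[i] * t).

    J[t, i] is the derivative of y(t) with respect to log(rates[i]).
    Large eigenvalues mark "stiff" parameter combinations; small ones
    mark "sloppy" combinations that barely change the predictions.
    """
    rates = np.asarray(rates, dtype=float)
    times = np.asarray(times, dtype=float)
    kt = np.outer(times, rates)          # shape (n_times, n_rates)
    jac = -kt * np.exp(-kt)              # d y / d log(rate_i)
    # Squared singular values of J equal the eigenvalues of J^T J,
    # and np.linalg.svd returns them sorted from largest to smallest.
    singular_values = np.linalg.svd(jac, compute_uv=False)
    return singular_values ** 2

# Hypothetical decay rates and observation times (assumptions for the demo).
rates = [1.0, 1.2, 1.4, 1.6]
times = np.linspace(0.0, 10.0, 200)
eigs = sensitivity_spectrum(rates, times)

for i, value in enumerate(eigs):
    print(f"combination {i}: eigenvalue = {value:.3e}")
```

In a toy model like this, the eigenvalues typically fall off by orders of magnitude from one combination to the next. The first one or two directions in parameter space control nearly all of the predicted behavior, while the rest can be changed substantially with little visible effect.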

Now they have applied their tools to physics theories and found a similar division into stiff and sloppy: The stiff rules comprise the useful information pertaining to a high-level or coarse description of the phenomenon being considered, whereas the sloppy ones hide microscopic complexity that becomes relevant only at the finer scales of a more elementary theory. In other words, Sethna says, “We’re coming to grips with how science works.”

“In physics, the complications all condense into an emergent, simpler description,” Sethna said. “In many other fields, this condensation is hidden – but it’s still true that many details don’t matter.”

The concept of emergence has long been known in physics: Complicated systems arise from a series of relatively simple interactions. The theoretical physics team re-examined some specific models, such as the classical diffusion equation that explains the way perfume disperses in a room. Using the same tools they had used to study cells, the researchers examined how the models’ behavior changed as the parameters were varied.

What the results showed, the researchers say, is that theories of anything from cell behavior to ecosystems and economics do not need to reflect all the details to be comprehensible and correct. A theory need only capture the collective, “stiff behaviors,” they say.

This, in fact, is why theoretical physics works – understanding the details of molecular shapes and sizes is not necessary for a sound wave theory; only factors like density and compressibility matter. Similarly, high-energy physicists need not solve every detail of string theory to predict the behavior of quarks.

“If one needed to extract every detail of the true underlying theory to make a useful theory, science would be impossible,” Sethna said.

The paper, “Parameter Space Compression Underlies Emergent Theories and Predictive Models,” includes work by Benjamin Machta, Ph.D. ’12; Ricky Chachra, Ph.D. ’14; and Mark Transtrum, Ph.D. ’11. The research was supported by the National Science Foundation.
